This weekend I’ve been doing some network experimentation on my little DSL connection. I’ve learned a couple of things the hard way so I figured a quick blog post is in order in the hopes that it will save someone else time.

PPP interface errors

Over the last while my Internet connection has been a little slow. I noticed that there were occasionally packet drops but I didn’t take the time to figure out where they were occurring. The testing I was doing this weekend was very sensitive to packet loss so I had to get to the bottom of this.

There were two symptoms. The first was a bunch of log entries like the following.

Apr 19 12:03:21 titan pppoe[26690]: Bad TCP checksum 109c
Apr 19 12:10:35 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:10:35 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:10:36 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:10:36 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:24:50 titan pppoe[26690]: Bad TCP checksum 3821
Apr 19 12:31:54 titan pppoe[26690]: Bad TCP checksum 9aeb
Apr 19 12:33:22 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:33:49 titan pppd[26689]: Protocol-Reject for unsupported protocol 0xb00
Apr 19 12:33:57 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x2fe5
Apr 19 12:33:58 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:01 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:02 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:12 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x58e6
Apr 19 12:34:14 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:17 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:27 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:29 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:30 titan pppd[26689]: Protocol-Reject for unsupported protocol 0xb00
Apr 19 12:34:31 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x800
Apr 19 12:34:33 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:34:36 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x7768

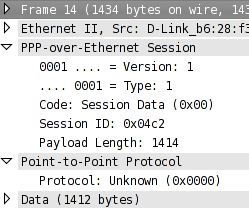

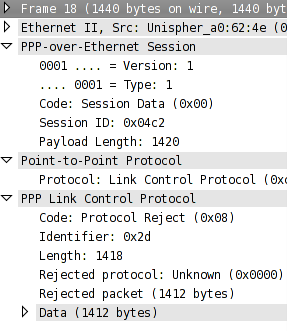

The bad TCP checksum entries hinted at some kind of packet corruption. However, I didn’t know if this was coming from packets being transmitted or received. Since I don’t know the inner workings of PPP as well as I’d like, the Protocol-Reject messages were harder to get a handle on. I grabbed a capture on the Ethernet interface underlying ppp0 so I could look at the PPP messages in Wireshark.
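Before opening Wireshark, it can help to tally the log entries to see how many distinct protocol numbers are being rejected. The following is a minimal Python sketch of that idea, not something I ran at the time. The sample lines are copied from the log excerpt above; the function name `summarize` and the regular expressions are my own choices for illustration.

```python
import re
from collections import Counter

# A few lines copied from the log excerpt earlier in this post.
SAMPLE_LOG = """\
Apr 19 12:03:21 titan pppoe[26690]: Bad TCP checksum 109c
Apr 19 12:10:35 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:10:35 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x0
Apr 19 12:33:49 titan pppd[26689]: Protocol-Reject for unsupported protocol 0xb00
Apr 19 12:33:57 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x2fe5
Apr 19 12:34:31 titan pppd[26689]: Protocol-Reject for unsupported protocol 0x800
"""

REJECT_RE = re.compile(r"Protocol-Reject for unsupported protocol (0x[0-9a-fA-F]+)")
CHECKSUM_RE = re.compile(r"Bad TCP checksum ([0-9a-fA-F]+)")


def summarize(log_text):
    """Return (bad checksum count, Counter of rejected protocol numbers)."""
    bad_checksums = 0
    rejected = Counter()
    for line in log_text.splitlines():
        if CHECKSUM_RE.search(line):
            bad_checksums += 1
        match = REJECT_RE.search(line)
        if match:
            # Store the protocol number as an integer so 0x0 and 0x00 compare equal.
            rejected[int(match.group(1), 16)] += 1
    return bad_checksums, rejected


bad, rejected = summarize(SAMPLE_LOG)
print("bad TCP checksums:", bad)
for proto, count in rejected.most_common():
    print(f"protocol 0x{proto:04x} rejected {count} time(s)")
```

A spread of unrelated protocol numbers, rather than one repeated value, is at least consistent with frames being mangled somewhere rather than with a single misconfigured protocol.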

Suspect PPP message

My PPPoE client sent a message with the protocol field set to 0. Wireshark doesn’t know what 0 is supposed to mean.

PPP rejection message

The remote PPPoE device then sent a message back rejecting the transmitted one. It’s even nice enough to return the entire payload, thereby wasting download bandwidth as well. From this packet capture I became pretty confident that the problem was on my end, not the ISP’s. After this I wasted a bunch of time playing around with the clamp TCP MSS PPP option because the data size in the above messages (1412) matched the clamp TCP MSS setting in my PPP interface configuration file.

The second symptom was a large number of receive errors on the ppp0 interface, while the underlying Ethernet interface did not have any errors. Unlike the PPP errors above, the receive errors made it look like the problem was in the PPP messages being received by my PPPoE client.
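One way to compare error counters between ppp0 and the Ethernet interface underneath it is to read them from `/proc/net/dev` on Linux. Here is a rough Python sketch of that comparison. The interface name `eth0` and every number in the sample text are made up for illustration; only the column layout follows the real file.

```python
# Hypothetical snapshot in the /proc/net/dev layout; the counters are invented.
SAMPLE_PROC_NET_DEV = """\
Inter-|   Receive                                                |  Transmit
 face |bytes    packets errs drop fifo frame compressed multicast|bytes    packets errs drop fifo colls carrier compressed
  eth0: 9000000   12000    0    0    0     0          0         0  4000000   11000    0    0    0     0       0          0
  ppp0: 8500000   11500  321    0    0     0          0         0  3800000   10500    0    0    0     0       0          0
"""


def rx_tx_errors(proc_net_dev_text):
    """Map interface name -> (receive errors, transmit errors)."""
    errors = {}
    for line in proc_net_dev_text.splitlines()[2:]:  # skip the two header lines
        name, _, counters = line.partition(":")
        fields = counters.split()
        if len(fields) < 16:
            continue  # not a counter line
        # Receive errs is the 3rd receive column, transmit errs the 3rd transmit column.
        errors[name.strip()] = (int(fields[2]), int(fields[10]))
    return errors


for iface, (rx, tx) in rx_tx_errors(SAMPLE_PROC_NET_DEV).items():
    print(f"{iface}: rx errors={rx}, tx errors={tx}")
```

On a real system you would pass in the contents of `/proc/net/dev` instead of the sample string and run it twice a few minutes apart to see which counters are still climbing.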

After several unsuccessful theories I finally figured out what the problem was. The PPPoE implementation on Linux has two modes: synchronous and asynchronous. Synchronous mode uses less CPU but requires a fast computer. I guess the P3-450 that I use as a gateway doesn’t qualify as fast, because as soon as I switched to asynchronous mode all of the errors went away.

Fixing the problem was good but this still didn’t make sense to me because I’ve been using this computer as a gateway for years. Then I discovered this Fedora bug. It turns out that Fedora 10 shipped with a version of system-config-network which contained a bug that defaulted all PPPoE connections to synchronous mode. This bug has since been fixed and pushed out to all Fedora users but that didn’t fix the problem for me because the PPP connection configuration was already generated.
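Because the fix never reaches a configuration file that has already been generated, it is worth checking the existing file by hand. The sketch below shows one way to do that in Python. It assumes the mode is controlled by a `SYNCHRONOUS=yes|no` line in the ifcfg file, which is the key name I believe the rp-pppoe style scripts use; treat that name, and the sample contents, as assumptions and verify them against your own file.

```python
def parse_ifcfg(text):
    """Parse simple KEY=value lines, ignoring blanks and comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip().strip("\"'")
    return settings


def uses_synchronous_mode(settings):
    """Return True/False for an explicit setting, or None if the key is absent.

    The key name SYNCHRONOUS is an assumption, not taken from this post.
    """
    value = settings.get("SYNCHRONOUS")
    if value is None:
        return None
    return value.lower() == "yes"


# Hypothetical file contents for illustration only.
sample = "DEVICE=ppp0\nSYNCHRONOUS=yes\n"
if uses_synchronous_mode(parse_ifcfg(sample)):
    print("Connection is set to synchronous mode; consider switching it off.")
```

Returning `None` for a missing key is deliberate: I don't want the sketch to guess what the scripts default to.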

In summary, this was a real pain but I did learn more about PPP than I’ve ever had reason to in the past.

Dropping PPP connections

Some of the experimentation I’ve been doing this weekend required completely congesting the upload channel of my DSL connection. I don’t just mean a bunch of TCP uploads; this doesn’t cause any problems. What I was doing is running three copies of the following.

ping -f -s 1450 alpha.coverfire.com

This generates significantly more traffic than my little 768 Kbps upload channel can handle. During these tests I noticed that occasionally the PPPoE connection would die and reconnect. Examples of the log entries associated with these events are below.
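To put a rough number on "significantly more", here is a back-of-the-envelope calculation in Python. It assumes IPv4, takes 768 Kbps to mean 768,000 bits per second, ignores PPPoE and Ethernet framing overhead, and uses the floor of 100 packets per second that the ping man page documents for flood mode (the real rate can be higher when replies come back quickly). The function name is mine.

```python
ICMP_HEADER_BYTES = 8
IPV4_HEADER_BYTES = 20


def offered_load_bps(payload_bytes, packets_per_second, copies):
    """IP-layer bits per second offered by several flood pings."""
    packet_bytes = payload_bytes + ICMP_HEADER_BYTES + IPV4_HEADER_BYTES
    return packet_bytes * 8 * packets_per_second * copies


UPLINK_BPS = 768_000  # assumption: Kbps means 1000 bits per second here

load = offered_load_bps(payload_bytes=1450, packets_per_second=100, copies=3)
print(f"offered load: {load / 1e6:.2f} Mbps")
print(f"uplink:       {UPLINK_BPS / 1e6:.2f} Mbps")
print(f"oversubscription: {load / UPLINK_BPS:.1f}x")
```

Even at the minimum flood rate, three copies offer several times what the upload channel can carry, so the queue in front of the DSL link stays full for the whole test.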

Apr 19 20:02:31 titan pppd[15627]: No response to 3 echo-requests
Apr 19 20:02:31 titan pppd[15627]: Serial link appears to be disconnected.

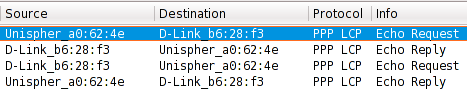

Since I had already been looking at PPP packet captures in Wireshark I recognized the following.

PPP echo

It appears that too much upload traffic causes enough congestion that the PPP echoes fail and the PPP connection is dropped after a timeout. I would have thought the PPP daemon would prioritize something like this over upper-layer packets, but nevertheless this appears to be the case. For the purposes of my testing this problem was easy to avoid by modifying the following lines in /etc/sysconfig/network-scripts/ifcfg-INTERFACE. I increased the failure count from 3 to 10.

LCP_FAILURE=10
LCP_INTERVAL=20
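For a sense of what this change buys, the time pppd waits before giving up is roughly the failure count multiplied by the echo interval. The small Python sketch below works that out. It assumes these two settings map onto pppd's `lcp-echo-failure` and `lcp-echo-interval` options and that the interval was already 20 seconds before the change; I only changed the failure count, so the "before" figure rests on that assumption.

```python
def seconds_until_link_declared_dead(lcp_failure, lcp_interval):
    """Approximate time with no echo replies before pppd drops the link."""
    return lcp_failure * lcp_interval


before = seconds_until_link_declared_dead(lcp_failure=3, lcp_interval=20)
after = seconds_until_link_declared_dead(lcp_failure=10, lcp_interval=20)
print(f"before: about {before} seconds of unanswered echoes")
print(f"after:  about {after} seconds of unanswered echoes")
```

The trade-off is that a link that really has gone down will also take that much longer to be noticed and redialed, which is fine for a test setup but worth remembering on a production gateway.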