Flow Classifier

The Linux kernel has many different tools for managing traffic. One of them is the flow classifier, which lets the user choose which packet header fields are hashed to identify flows so that they can be managed. For example, selecting src,dst,proto,proto-src,proto-dst yields a unique value for each flow (within the limits of the hash). Alternatively, using only src as the key groups all flows by source IP address.
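To make the idea of key selection concrete, here is a minimal sketch in Python. It is not the kernel's implementation: the dictionary packet representation, the `flow_hash` helper and the use of CRC32 are all assumptions made for illustration, and the kernel uses its own hash function.

```python
import zlib

def flow_hash(packet, keys, divisor=1024):
    """Hash the selected header fields into one of `divisor` buckets.

    Hypothetical helper: CRC32 stands in for the kernel's hash function.
    """
    material = "|".join(str(packet[k]) for k in keys).encode()
    return zlib.crc32(material) % divisor

# Two made-up flows from the same host: an SSH session and a DNS query.
ssh = {"src": "10.0.0.2", "dst": "192.0.2.1", "proto": 6,
       "proto-src": 40000, "proto-dst": 22}
dns = {"src": "10.0.0.2", "dst": "198.51.100.7", "proto": 17,
       "proto-src": 5353, "proto-dst": 53}

full_keys = ["src", "dst", "proto", "proto-src", "proto-dst"]

# With all five keys the two flows very likely land in different buckets.
print(flow_hash(ssh, full_keys), flow_hash(dns, full_keys))

# With only src as the key both flows always share a bucket.
print(flow_hash(ssh, ["src"]), flow_hash(dns, ["src"]))
```

The second pair of values is always identical because the only hashed field, the source address, is the same for both packets.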

The Problem

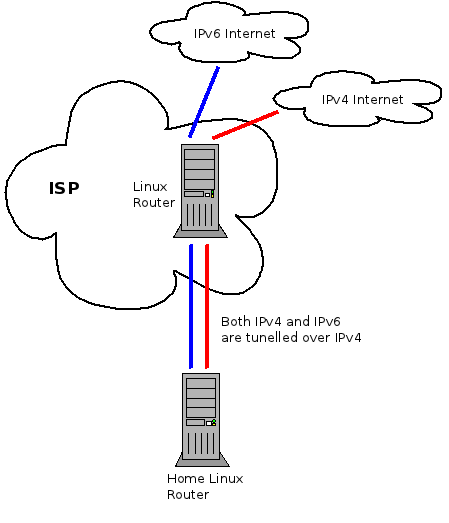

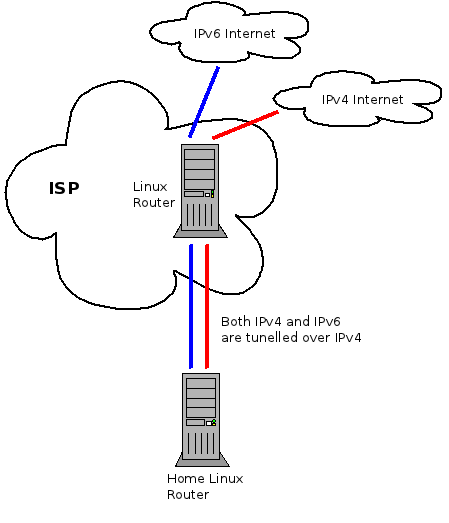

Below is a slightly simplified version of my home network.

Figure 1: Simplified home network

All of the traffic, both IPv4 and IPv6, is tunnelled through a Linux router which lives at my service provider. The reason for this complicated setup is that it gives me control of the traffic in both the upstream and downstream directions. By shaping the traffic to just below the maximum rate in each direction, I am able to avoid Bufferbloat problems and prioritize latency-sensitive traffic such as SSH, DNS and Vonage. Especially under load, my QoS scripts make a marked difference in how fast the Internet feels.

The multiple tunnels present a problem for implementing my QoS scheme because, from the perspective of the underlying interface, there are only two flows on the network: one for the IP-IP tunnel and one for the IP-IPv6 tunnel. A workaround I used for a while was to apply the QoS rules to the IP-IP tunnel interface, because that’s where the bulk of the traffic flows. However, this meant that IPv6 traffic was not properly controlled, and any time I had a significant amount of IPv6 traffic I lost all the advantages of my QoS scheme.

To solve this properly I needed a way to look into the tunnels in order to identify the inner network flows. So I’ve extended the flow classifier with the keys in the following table. IP-IP, IP-IPv6, IPv6-IP and IPv6-IPv6 tunnels are supported.

| Key | Description |
| --- | --- |
| tunnel-src | Extract the source IP from the inner header |
| tunnel-dst | Extract the destination IP from the inner header |
| tunnel-proto | Extract the protocol from the inner header |
| tunnel-proto-src | Extract the transport protocol source port from the inner header |
| tunnel-proto-dst | Extract the transport protocol destination port from the inner header |
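The following Python sketch shows roughly what looking into a tunnel involves. It is not the patch itself: the `inner_keys` and `ipv4_header` helpers are hypothetical, only an IPv4 outer header is handled, and IPv6 extension headers are ignored to keep the example short.

```python
import socket
import struct

IPPROTO_IPIP = 4    # IPv4 encapsulated in IP
IPPROTO_IPV6 = 41   # IPv6 encapsulated in IP

def inner_keys(packet: bytes):
    """Return tunnel-* values for an IPv4 packet carrying IPv4 or IPv6, else None."""
    ihl = (packet[0] & 0x0F) * 4           # outer header length in bytes
    outer_proto = packet[9]
    inner = packet[ihl:]
    if outer_proto == IPPROTO_IPIP:
        inner_ihl = (inner[0] & 0x0F) * 4
        proto = inner[9]
        src = socket.inet_ntop(socket.AF_INET, inner[12:16])
        dst = socket.inet_ntop(socket.AF_INET, inner[16:20])
        l4 = inner[inner_ihl:]
    elif outer_proto == IPPROTO_IPV6:
        proto = inner[6]                   # next header; extension headers ignored
        src = socket.inet_ntop(socket.AF_INET6, inner[8:24])
        dst = socket.inet_ntop(socket.AF_INET6, inner[24:40])
        l4 = inner[40:]
    else:
        return None                        # not a tunnelled packet
    keys = {"tunnel-src": src, "tunnel-dst": dst, "tunnel-proto": proto}
    if proto in (6, 17) and len(l4) >= 4:  # TCP and UDP both start with the ports
        sport, dport = struct.unpack("!HH", l4[:4])
        keys["tunnel-proto-src"] = sport
        keys["tunnel-proto-dst"] = dport
    return keys

def ipv4_header(src, dst, proto, payload_len):
    """Build a minimal 20-byte IPv4 header (checksum left at zero)."""
    return struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + payload_len, 0, 0, 64,
                       proto, 0, socket.inet_aton(src), socket.inet_aton(dst))

# A made-up UDP datagram inside an IP-IP tunnel.
udp = struct.pack("!HHHH", 5353, 53, 8, 0)
inner = ipv4_header("192.168.1.10", "192.0.2.53", 17, len(udp)) + udp
outer = ipv4_header("203.0.113.5", "198.51.100.1", IPPROTO_IPIP, len(inner)) + inner

print(inner_keys(outer))
```

For the sample packet the extracted values describe the inner UDP flow (192.168.1.10 port 5353 to 192.0.2.53 port 53) rather than the tunnel endpoints.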

Results

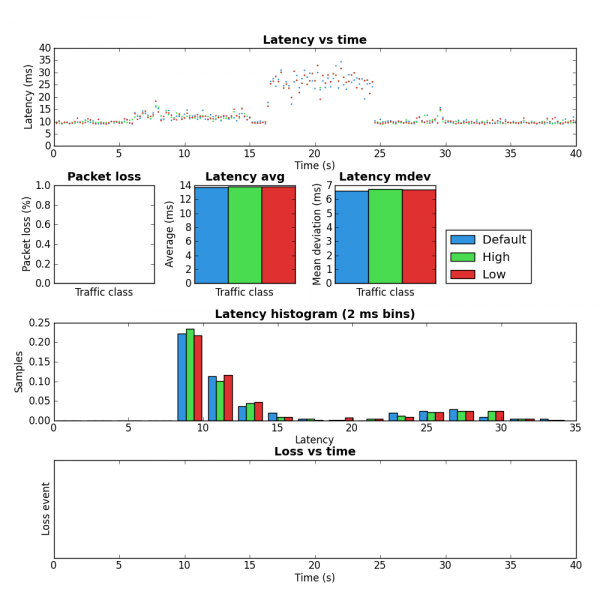

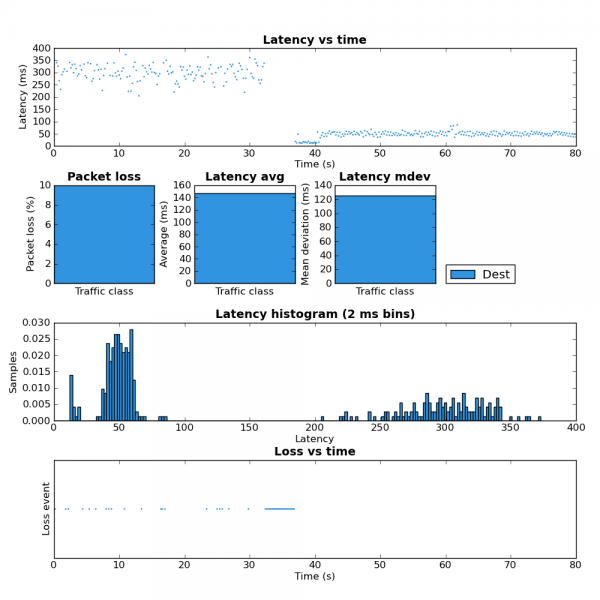

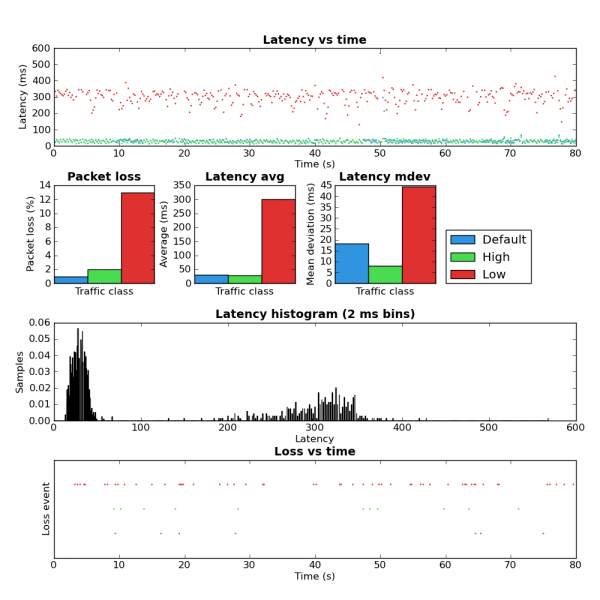

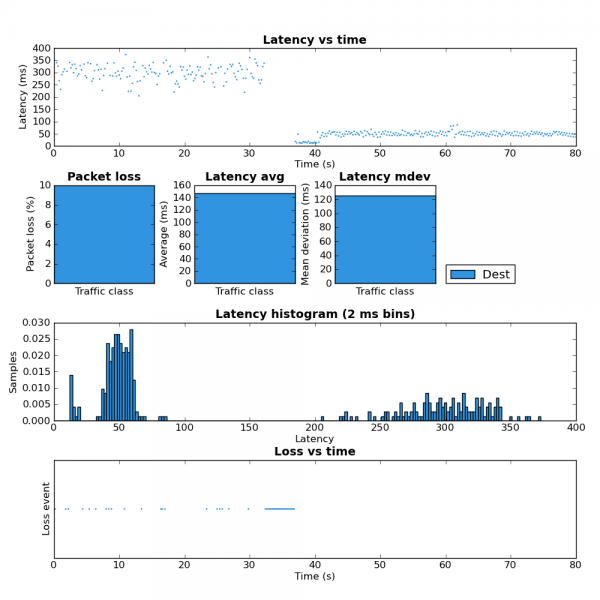

To validate that this works, I started a couple of SCP uploads to max out the upstream bandwidth and then ran ping-exp to measure the latency. At the start of the test the flow classifier keys were src,dst,proto,proto-src,proto-dst. Approximately halfway through I changed the keys to src,dst,proto,proto-src,proto-dst,tunnel-src,tunnel-dst,tunnel-proto,tunnel-proto-src,tunnel-proto-dst. The advantage of keeping the non-tunnel keys is that any traffic created by the router itself is still classified properly. Here is the tc script I used. You can see the results of this test in Figure 2 below.

Figure 2: Before and after tunnel keys

For the first half of the test you can see the high latency. This is because all the traffic from the SCP uploads and the ICMP pings is placed into the same queue: from the perspective of the flow classifier there is only one flow. In the second half of the test the addition of the tunnel keys allows the flow classifier to place the ICMP packets into a different queue, which is not affected by the SCP uploads and therefore has much lower latency. The large amount of packet loss during the key change occurs because the script I used creates a large number of queues, and packets are dropped while these queues are being created.
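The effect of the key change can be illustrated with a small Python sketch. The packet dictionaries and the `flow_id` helper are hypothetical, the addresses are made up, and the tuple of selected values is used directly as the flow identity instead of a hash so that the outcome does not depend on hash collisions.

```python
OUTER_KEYS = ["src", "dst", "proto", "proto-src", "proto-dst"]
TUNNEL_KEYS = OUTER_KEYS + ["tunnel-src", "tunnel-dst", "tunnel-proto",
                            "tunnel-proto-src", "tunnel-proto-dst"]

def flow_id(packet, keys):
    # Fields a packet does not have contribute None (an assumption of this sketch).
    return tuple(packet.get(k) for k in keys)

# Both packets travel through the same IP-IP tunnel, so the outer fields match.
outer = {"src": "203.0.113.5", "dst": "198.51.100.1", "proto": 4}
scp = {**outer, "tunnel-src": "192.168.1.10", "tunnel-dst": "192.0.2.20",
       "tunnel-proto": 6, "tunnel-proto-src": 40000, "tunnel-proto-dst": 22}
ping = {**outer, "tunnel-src": "192.168.1.10", "tunnel-dst": "192.0.2.30",
        "tunnel-proto": 1}

packets = (scp, ping)
print(len({flow_id(p, OUTER_KEYS) for p in packets}))   # 1: one shared queue
print(len({flow_id(p, TUNNEL_KEYS) for p in packets}))  # 2: separate queues
```

With only the outer keys the upload and the ping are indistinguishable, which matches the first half of the test. Once the tunnel keys are included they become two distinct flows.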

While my network setup may be a bit unusual, I think it’s likely that many home networks will have some form of tunnelling in the near future, as tunnels are part of several IPv6 migration strategies. So hopefully this little addition will be useful in many different contexts.

Below are links to the two patches that are required. I’ll post them to Netdev for review shortly.

clsflow-tunnel-20111016.patch

iproute-clsflow-tunnel-20111016.patch